June 27, 2025

CIO Talk: What Is AGI and Why Should You Care?

The progress of artificial intelligence in the last few years, we’d all agree, has been phenomenal. Five years ago, trapped in the misery of Covid, none of us foresaw the emergence of ChatGPT and its follow-on effects: that Microsoft would invest $13 billion in OpenAI, that Nvidia would approach a $4 trillion market cap, that tech vendors would be scrambling to build data centers. If everything’s about AI these days, then capex truly has become strategy.

But can machines ever approach the human capacity for intelligence?

It’s not – at all – an abstract question. If OpenAI ever achieves Artificial General Intelligence (AGI), as it’s called, then it is no longer bound by the exclusivity clause in its contract with Microsoft.

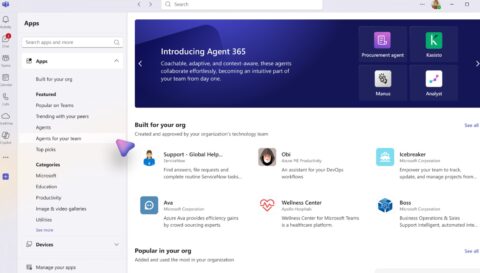

If you’re Satya Nadella, that’s a big deal. Microsoft bases much of its market leadership in AI on its exclusive access to OpenAI models, which underlie all those Copilots and Copilot Studio, and are available for use in Azure.

What is AGI?

A very good question, and one which has many possible answers. Some argue that it means passing the famous Turing Test: briefly, that a conversation with a computer is indistinguishable from chatting with another person. By that measure, we may have already achieved AGI.

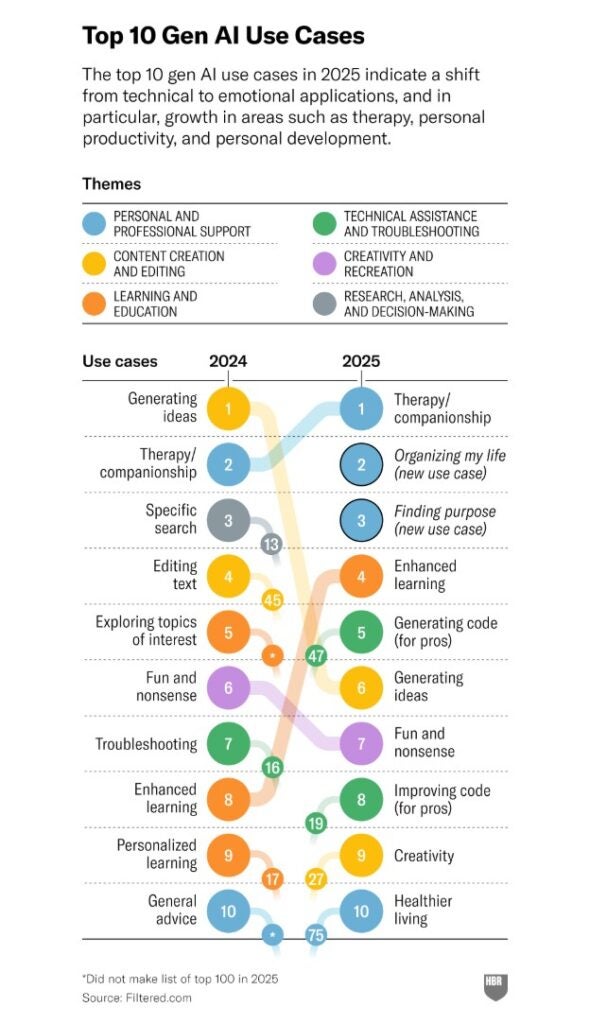

Another test (called generality) involves how broad a range of topics an AI can be used for. An LLM trained on the entire internet can chat with you about much more than one trained just on your local enterprise data. Here again, we seem to be doing pretty well: a recent Harvard Business Review study showed that the top use case for AI in 2025 is…wait for it…therapy and companionship. Numbers two and three: “organizing my life” and “finding purpose.”

Check it out: Top 10 GenAI Use Cases (Harvard Business Review)

In 2023, Mustafa Suleyman (now CEO of AI at Microsoft) and Michael Bhaskar (AI strategy and communications at Microsoft) suggested a more quantitative test: give an AI $100,000 and see whether it can invest that sum and turn it into $1,000,000. Such a task involves complex reasoning, analytics, and forecasting.

We’re not there yet.

So which is it? It matters, because Microsoft and OpenAI are furiously negotiating over whether the latter’s models can be more broadly available, whether OpenAI can go public, and how large Microsoft’s stake in the company will be.

How Do We Measure AI and AGI?

To know if we’ve achieved AGI, we have to be able to measure it.

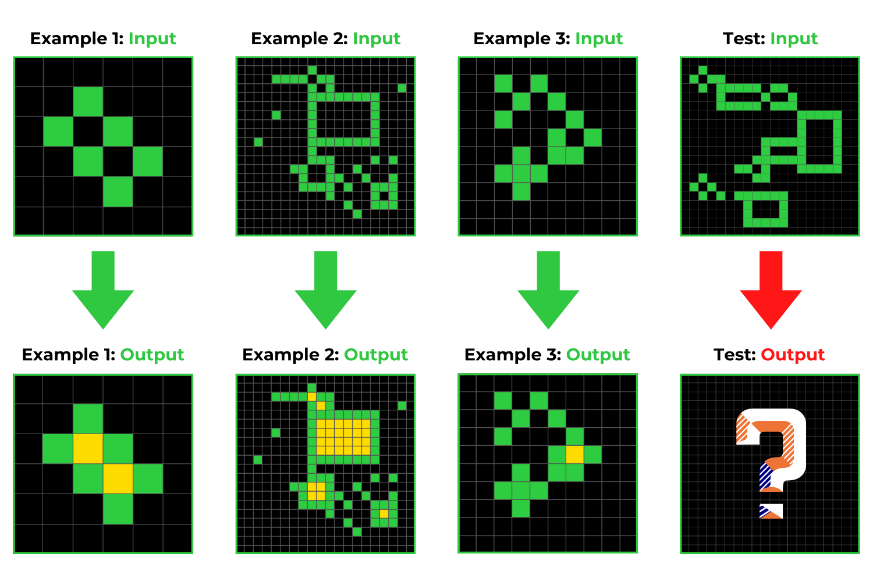

Several organizations have proposed tests. Lab42, headquartered in Davos, Switzerland, offers a battery of reasoning tests to measure just how “intelligent” an AI can be. Here’s a sample problem from their website:

Google’s DeepMind approaches the problem differently. It suggests a matrix classifying “Levels of AGI,” with two axes: generality, describing an AI as either narrow (can only handle certain types of problems) or general (can handle a wide range of problems); and performance, that is, how an AI’s capabilities compare with those of humans, graded from Level 0 to Level 5. A Level-0 application could be (for example) a calculator, with no AI; Level-5, somewhat terrifyingly, is termed “superhuman,” exceeding human capabilities. (Luckily, we haven’t achieved that yet.)
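For readers who think in code, here is a minimal sketch of that classification as a data structure. The names for Levels 1 through 4 are not given in this article; they are filled in from my recollection of the DeepMind paper and should be treated as an assumption, as should the hypothetical `Classification` class itself.

```python
from dataclasses import dataclass
from enum import Enum, IntEnum


class Performance(IntEnum):
    """Performance axis. Names for levels 1-4 are an assumption (recalled, not from this article)."""
    NO_AI = 0
    EMERGING = 1
    COMPETENT = 2
    EXPERT = 3
    VIRTUOSO = 4
    SUPERHUMAN = 5


class Generality(Enum):
    """Generality axis: how wide a range of problems the system handles."""
    NARROW = "narrow"
    GENERAL = "general"


@dataclass(frozen=True)
class Classification:
    """Hypothetical record placing one system in one cell of the matrix."""
    system: str
    performance: Performance
    generality: Generality

    def label(self) -> str:
        return f"{self.system}: Level {int(self.performance)}, {self.generality.value}"


# The article's own example: a calculator involves no AI and is narrow.
calculator = Classification("Calculator", Performance.NO_AI, Generality.NARROW)
print(calculator.label())

# Six performance levels times two generality values gives twelve cells.
print(len(Performance) * len(Generality))
```

Using an `IntEnum` for performance means levels can be compared directly (`Performance.SUPERHUMAN > Performance.EXPERT`), which is the only ordering the matrix implies.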

And there are lots of other possibilities. We could simply give it IQ tests, or current events tests, or empathy-emulation tests, or all of these and more.

Ultimately, I suspect, it will come down to a value judgment based partly on how well a given AI performs on quantitative tests – and how human it “feels.”

Which will make that pesky clause in the Microsoft-OpenAI contract difficult to resolve.

Efficiency and Innovation

Today, organizations spend large sums of money on fundamentally two types of initiatives: innovation and operational efficiency. (It’s true when you think about it.)

Every AI use case I’ve seen is about operational efficiency. AI can make your employees better writers, better coders, in general more productive – in many cases much more productive. (Full disclosure: I used AI to research this article – but not to write it.)

I’ve yet to see an AI that’s truly innovative. It’s difficult to imagine that AI could, independent of any human assistance, come up with a revolutionary product like the iPhone, for example. Sure, it could make an existing product better, improve production times, and so on – but true innovation?

To me that’s true “intelligence” and that’s our job, and always will be.

Progress, Determinism and Probability

Lastly: many have commented that our march to AGI has slowed, that progress in AI has plateaued. True, training and inferencing have become more efficient (see the previous section): Nvidia’s chips have gotten faster and denser, and data centers are springing up everywhere (remember, capex = strategy). And OpenAI and others are testing new techniques like reinforcement learning (RL) in post-training fine-tuning (basically, you score the LLM’s answers, rewarding the right ones, and it adjusts its weights accordingly).

Thus, with continuing incremental improvements and economies of scale, things will arguably get gradually better and perhaps cheaper (we’ll see).

But let’s remember that LLMs today are fundamentally probabilistic: they use advanced statistical algorithms to infer what the next best word or next best sentence in a response to a prompt might be. (Is post-training RL “cheating”? You be the judge.) That’s very different from how traditional, deterministic software applications work, in which you are guaranteed the same output for the same input.
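To make the contrast concrete, here is a minimal sketch in Python. The scores in `LOGITS` are invented for illustration, and the function names are hypothetical; a real LLM works over tens of thousands of tokens rather than three words, but the principle of turning scores into probabilities and then drawing from them is the same.

```python
import math
import random

# Invented scores a model might assign to candidate next words
# after the prompt "A dog is a ...". Assumption: illustrative numbers only.
LOGITS = {"mammal": 4.0, "pet": 3.0, "reptile": 0.5}


def softmax(logits, temperature=1.0):
    """Convert raw scores into probabilities that sum to 1."""
    scaled = {word: score / temperature for word, score in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {word: math.exp(score - peak) for word, score in scaled.items()}
    total = sum(exps.values())
    return {word: value / total for word, value in exps.items()}


def sample_next_word(logits, rng, temperature=1.0):
    """Probabilistic: draw one word in proportion to its probability."""
    probs = softmax(logits, temperature)
    words = list(probs)
    return rng.choices(words, weights=[probs[w] for w in words], k=1)[0]


def most_likely_word(logits):
    """Deterministic: always return the highest-scoring word."""
    return max(logits, key=logits.get)


if __name__ == "__main__":
    rng = random.Random()
    # Same input ten times: the sampled answers can differ from run to run...
    print([sample_next_word(LOGITS, rng) for _ in range(10)])
    # ...while the deterministic function returns the same answer every time.
    print([most_likely_word(LOGITS) for _ in range(10)])
```

With these made-up scores, “mammal” wins most of the time, but “pet” and occasionally “reptile” will also turn up in the sampled list. That is the sense in which the right answer is likely rather than guaranteed.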

In my opinion, what’s missing in current LLMs is a solid grounding in common-sense knowledge. “A dog is a mammal” – everybody instinctively knows that. Not an LLM, though – it reasons over its training data to determine that statement’s probability. It’s usually going to get it right – but it’s not guaranteed.

Therefore I suspect that the next major leap in AI will occur when LLMs can refer to a systematized ontology of indisputable facts to reduce probabilistic errors and hallucinations.
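What might such a lookup look like? Here is a toy sketch, not a description of how any real system works. The `IS_A` table and the `check_claim` function are hypothetical, and the sketch assumes a “closed world”: if the ontology knows the subject but not the link, the claim is treated as false.

```python
# Toy "is-a" ontology: each concept maps to its direct parent category.
# Assumption: illustrative entries, not drawn from any real knowledge base.
IS_A = {
    "dog": "mammal",
    "cat": "mammal",
    "mammal": "animal",
    "lizard": "reptile",
    "reptile": "animal",
}


def ancestors(concept):
    """Return every category the concept belongs to, following is-a links upward."""
    found = []
    seen = {concept}
    current = IS_A.get(concept)
    while current is not None and current not in seen:  # guard against cycles
        found.append(current)
        seen.add(current)
        current = IS_A.get(current)
    return found


def check_claim(subject, category):
    """Return True or False when the ontology knows the subject, None when it does not."""
    if subject not in IS_A:
        return None  # unknown subject: no fact to ground on, so do not guess
    return category in ancestors(subject)


if __name__ == "__main__":
    print(check_claim("dog", "mammal"))      # True
    print(check_claim("dog", "reptile"))     # False
    print(check_claim("unicorn", "mammal"))  # None
```

The point of the design is that the answer for “dog” never varies: the same question always returns the same result, and anything outside the ontology is flagged as unknown instead of being answered with a confident guess.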

Net: it may be a while before an AI can truly pass as human.

Microsoft and OpenAI

Yes, we’ve digressed (but wasn’t it fun?). As I write this, Microsoft and OpenAI are trying to resolve their differences – who will win? Does it matter? What do you think?

Let us know!