August 15, 2025

CIO Talk: What Open-Source Models Mean to Enterprises

By Barry Briggs

Recently OpenAI open-sourced two Large Language Models (LLMs); seemingly moments later, those models were made available on Azure AI Foundry and on the AI community website Hugging Face.

What are open-source language models and what do they mean for enterprise usage and applications? And what is driving the urgency some feel in releasing open-source models?

Weight a Minute!

LLMs, at their core, are based on multi-layer neural networks. Each little circle in the (highly simplified) diagram below represents a “neuron,” a small unit of computation. The key element is the “weight”: a number expressing how much importance the model places on each input to a neuron’s calculation. Large LLMs have hundreds of billions or even trillions of these weights (also called parameters), which is why highly parallelized GPUs are well-suited for LLM workloads.

Think of the inferencing process as computing a very large mathematical equation with billions of variables, each “weighted” by a number. In highly simplified form: output = A·x₁ + B·x₂ + C·x₃ + …, where x₁, x₂, x₃ are the inputs and A, B, C, and so on are the weights.
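
To make the equation concrete, here is a minimal Python sketch of a single artificial neuron and a layer of them. It is a toy under simplifying assumptions: the names `neuron`, `layer`, and `parameter_count` are hypothetical, the activation function is a plain ReLU, and real LLMs use vastly larger weight matrices, other activation functions, and GPU-optimized libraries.

```python
from typing import List


def neuron(inputs: List[float], weights: List[float], bias: float = 0.0) -> float:
    """One toy neuron: a weighted sum (A*x1 + B*x2 + C*x3 + ...) plus a bias,
    passed through a ReLU nonlinearity."""
    if len(inputs) != len(weights):
        raise ValueError("need exactly one weight per input")
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return max(0.0, total)  # ReLU: negative sums become 0.0


def layer(inputs: List[float], weight_rows: List[List[float]], biases: List[float]) -> List[float]:
    """A layer is many neurons reading the same inputs, each with its own weights."""
    return [neuron(inputs, row, b) for row, b in zip(weight_rows, biases)]


def parameter_count(layer_sizes: List[int]) -> int:
    """Weights plus biases in a fully connected stack such as [4, 8, 2]."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


# Same input, different weights, different answer.
x = [1.0, 2.0, 3.0]
print(neuron(x, [0.5, -1.0, 2.0]))   # 0.5 - 2.0 + 6.0 = 4.5
print(neuron(x, [0.5, -1.0, -2.0]))  # 0.5 - 2.0 - 6.0 = -7.5, clipped to 0.0 by ReLU
print(parameter_count([4, 8, 2]))    # (4*8 + 8) + (8*2 + 2) = 58
```

Note how changing a single weight changes the output for the same input: that is the sense in which the weights, rather than the surrounding code, hold what a model has learned.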

Here’s the point: the weights are the secret sauce of an LLM, its core IP, somewhat analogous to source code. That is why the models behind OpenAI’s ChatGPT were closed-source: the weights of models such as the 175-billion-parameter GPT-3 were never disclosed.

(There’s much, much more to it of course: current LLMs owe much of their efficacy to an epochal 2017 paper called “Attention Is All You Need,” which introduced the Transformer architecture, built around a mechanism called attention that determines how each piece of the input draws on the others; hence the “T” in “Generative Pre-trained Transformer,” or GPT. But I digress…)
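
For readers who want a peek under the hood, the core operation that paper introduced, scaled dot-product attention, computes softmax(Q·Kᵀ/√d)·V: each token’s query vector is compared with every token’s key vector, and the resulting weights decide how much of each token’s value vector flows into the output. The Python below is a minimal single-head sketch with made-up three-token inputs; production Transformers add learned projections, many attention heads, masking, and GPU matrix libraries.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(scores: List[float]) -> List[float]:
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Scaled dot-product attention, softmax(Q K^T / sqrt(d)) V, one output row per query."""
    d = len(k[0])
    out = []
    for q_row in q:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(a * b for a, b in zip(q_row, k_row)) / math.sqrt(d) for k_row in k]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        out.append([sum(w * v_row[j] for w, v_row in zip(weights, v)) for j in range(len(v[0]))])
    return out


# Three "tokens" with 2-dimensional vectors (invented numbers).
q = k = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
v = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
for row in attention(q, k, v):
    print([round(x, 2) for x in row])
```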

Customizing Models

Enterprises quickly realized that LLMs could be incredibly powerful and useful within their applications and environments. But how to safely connect or integrate an LLM with potentially sensitive enterprise data?

RAGs

One of the first, and still perhaps the most prevalent, approaches is called RAG, or Retrieval-Augmented Generation. RAG apps, as they’re termed, connect models to data via search techniques.

In a typical HR scenario, for example, a model (hosted within the organization’s security boundary, such as an Azure tenant) parses the user’s question, extracts relevant terms, queries the connected HR application, and then formulates a natural-language response.
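
The retrieve-then-generate flow can be sketched in a few lines of Python. This is a toy under stated assumptions: the HR snippets are invented, retrieval is a simple keyword-overlap score rather than the vector or hybrid search a production RAG app would typically use, and `call_llm` is a hypothetical stand-in for whatever model endpoint sits inside the tenant.

```python
import re
from typing import Callable, List, Set

# Hypothetical HR knowledge base; a real app would query a search index or the HR system.
DOCUMENTS = [
    "Employees accrue 1.5 vacation days per month of service.",
    "The dental plan covers two cleanings per year.",
    "Remote work requests must be approved by a manager.",
]


def tokenize(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, docs: List[str], top_k: int = 1) -> List[str]:
    """Rank documents by how many words they share with the question."""
    q_terms = tokenize(question)
    ranked = sorted(docs, key=lambda d: len(q_terms & tokenize(d)), reverse=True)
    return ranked[:top_k]


def build_prompt(question: str, context: List[str]) -> str:
    """Ground the model: ask it to answer only from the retrieved snippets."""
    sources = "\n".join(f"- {c}" for c in context)
    return f"Answer using only these sources:\n{sources}\n\nQuestion: {question}\nAnswer:"


def answer(question: str, call_llm: Callable[[str], str]) -> str:
    context = retrieve(question, DOCUMENTS)
    return call_llm(build_prompt(question, context))


# Usage with a stub that simply echoes the prompt instead of calling a real model.
print(answer("How many vacation days do I accrue?", call_llm=lambda prompt: prompt))
```

Keeping retrieval separate from generation is what lets the model stay generic while the enterprise data stays in its own system, queried fresh on every question.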


RAG apps are conceptually straightforward, relatively easy to create, and, if properly developed, secure. They are particularly useful for rapidly changing data such as news. However, the search process (which can involve multiple steps) can introduce latency, and if the retrieved information is long, such as a multi-megabyte PDF, it can add expense (since costs are based on token count) and even overflow the LLM’s context window.
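
One common mitigation for oversized retrievals is to split long documents into chunks and pass only the relevant ones to the model. The sketch below assumes a rough rule of thumb of about four characters per token for English text (an approximation; real counts come from the model’s own tokenizer) and a hypothetical 8,000-token budget; both numbers are illustrative, not limits of any particular model.

```python
from typing import List

CHARS_PER_TOKEN = 4  # rough heuristic for English text; real counts need the model's tokenizer


def estimate_tokens(text: str) -> int:
    """Approximate token count, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(text: str, budget_tokens: int) -> bool:
    return estimate_tokens(text) <= budget_tokens


def chunk(text: str, max_tokens: int, overlap_tokens: int = 0) -> List[str]:
    """Split text into pieces of at most ~max_tokens each. With overlap_tokens > 0,
    the end of each chunk is repeated at the start of the next, so text near a
    boundary is not seen only in fragments."""
    if not 0 <= overlap_tokens < max_tokens:
        raise ValueError("overlap must be >= 0 and smaller than max_tokens")
    size = max_tokens * CHARS_PER_TOKEN
    step = (max_tokens - overlap_tokens) * CHARS_PER_TOKEN
    chunks: List[str] = []
    start = 0
    while start < len(text):
        chunks.append(text[start:start + size])
        if start + size >= len(text):
            break  # this chunk already reaches the end of the text
        start += step
    return chunks


# Stand-in for a multi-megabyte PDF extracted to ~3 million characters of text.
doc = "x" * 3_000_000
print(estimate_tokens(doc), fits_in_context(doc, budget_tokens=8_000))
print(len(chunk(doc, max_tokens=8_000)), "chunks")
```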

Fine Tuning a Closed Model

Fine tuning allows organizations to adjust the internal operation of the LLM itself. In fine tuning, developers “feed” enterprise data to an LLM (again, if using Azure, hosted inside the organizational tenant), which has the effect of changing the LLM’s weights. That in turn (typically) creates a new instance of the model.

Fine tuning a closed-source model involves uploading text or images in specific formats to the model provider via an API. It’s useful for scenarios that require specialized terminology, such as medical or legal terms, or enterprise-specific jargon such as product names and descriptions, equipment manuals, scientific writing, and proprietary terminology; and, in general, for scenarios where the data does not change frequently. Morgan Stanley uses a fine-tuned LLM based on GPT-4 to assist its financial advisors with complex questions.
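
To make “specific formats” concrete: for chat models, OpenAI’s and Azure OpenAI’s fine-tuning APIs accept JSON Lines (JSONL) files in which each line is one example conversation made of `system`, `user`, and `assistant` messages. The Python sketch below only builds and sanity-checks such a file; the company name Contoso and its product jargon are invented, the upload and job-creation step is omitted, and exact requirements (minimum number of examples, supported models) should be checked against current documentation.

```python
import json
from typing import Dict, Iterable, List, Tuple

SYSTEM_PROMPT = "You are Contoso's internal support assistant."  # hypothetical company/persona

# Invented examples teaching enterprise-specific jargon (product names, processes).
EXAMPLES = [
    ("What is a TX-9?", "The TX-9 is Contoso's rack-mounted telemetry gateway."),
    ("What does RMA-Blue mean?", "RMA-Blue is Contoso's expedited return process for enterprise customers."),
]


def to_record(question: str, answer: str) -> Dict[str, List[Dict[str, str]]]:
    """One training example in the chat-style "messages" format."""
    return {"messages": [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": question},
        {"role": "assistant", "content": answer},
    ]}


def to_jsonl(pairs: Iterable[Tuple[str, str]]) -> str:
    """JSON Lines: one JSON object per line, no enclosing array."""
    return "".join(json.dumps(to_record(q, a)) + "\n" for q, a in pairs)


def validate(jsonl: str) -> int:
    """Light sanity check: each line parses and ends with the assistant's reply."""
    count = 0
    for line_no, line in enumerate(jsonl.splitlines(), start=1):
        messages = json.loads(line)["messages"]
        if messages[-1]["role"] != "assistant":
            raise ValueError(f"line {line_no}: last message must be from the assistant")
        count += 1
    return count


data = to_jsonl(EXAMPLES)
print(validate(data), "examples ready")
# Next step (not shown): save `data` as e.g. train.jsonl and upload it through
# the provider's fine-tuning API or the Azure AI Foundry portal.
```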

However, fine tuning closed models can be costly: invoking the fine-tuning APIs can be expensive, and inferencing fine-tuned models generally comes with additional cost.

Finally, and perhaps most importantly, closed models such as GPT-4o cannot be hosted locally (that is, on-premises). That precludes a number of important use cases and means that using them incurs cloud costs.

The Emergence – and Importance – of Open-Source Models

Open-source models are freely available, downloadable, and, most importantly, expose their weights directly for fine-tuning. In the last few years we’ve seen, perhaps somewhat surprisingly given the investment required to create them, numerous “open-source” models appear.

Open-source models can be fine-tuned (a process not dissimilar to training) locally on customer hardware – even on your PC – so that experimentation, testing, and deployment can happen inexpensively. Software encodes the input data (say, reference material of some sort) and then adjusts the weights of the model.
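
What “adjusts the weights” means can be shown with a deliberately tiny example: start from a “pretrained” set of weights, then nudge them by gradient descent until the model fits a handful of new examples. This Python sketch is an assumption-laden toy (one linear neuron, squared-error loss, plain gradient descent, invented data); real fine-tuning of an open-weight LLM uses frameworks such as PyTorch and often parameter-efficient methods such as LoRA, but the principle of updating weights to reduce error on new data is the same.

```python
from typing import List, Tuple

Example = Tuple[List[float], float]


def predict(weights: List[float], x: List[float]) -> float:
    return sum(w * xi for w, xi in zip(weights, x))


def mse(weights: List[float], data: List[Example]) -> float:
    """Mean squared error of the model on the data."""
    return sum((predict(weights, x) - t) ** 2 for x, t in data) / len(data)


def fine_tune(weights: List[float], data: List[Example],
              lr: float = 0.05, epochs: int = 500) -> List[float]:
    """Gradient descent on mean squared error, starting from existing weights."""
    w = list(weights)  # copy, so the original "base model" stays intact
    n = len(data)
    for _ in range(epochs):
        grads = [0.0] * len(w)
        for x, target in data:
            error = predict(w, x) - target
            for j, xj in enumerate(x):
                grads[j] += 2 * error * xj / n  # partial derivative of MSE w.r.t. w_j
        w = [wj - lr * gj for wj, gj in zip(w, grads)]
    return w


base = [1.0, 1.0]  # pretend these came from pretraining
# Invented "domain" data following the rule target = 2*x1 - 1*x2.
domain = [([1.0, 0.0], 2.0), ([0.0, 1.0], -1.0), ([1.0, 1.0], 1.0), ([2.0, 1.0], 3.0)]
tuned = fine_tune(base, domain)
print("error before:", round(mse(base, domain), 3), "after:", round(mse(tuned, domain), 6))
print("tuned weights:", [round(v, 3) for v in tuned])
```

The base weights are left untouched and a new set is produced, which mirrors how fine tuning typically yields a new instance of the model.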

For example, dozens of fine-tuned LLMs have cropped up in nearly every area of healthcare and medicine (see a survey here); one, called Clinical Camel, uses a fine-tuned version of Meta’s Llama 2 model as a diagnostician, with impressive results. Other use cases include LLMs in edge computing, financial fraud detection, smart cities, eLearning, and manufacturing, among others.

While OpenAI published arguably the first open-source model, a reduced version of GPT-2, in 2019, it has since fallen behind: a tsunami of open-source models, many from China, has emerged. Indeed, when the open-source DeepSeek-R1 model (from DeepSeek, a Chinese lab backed by the hedge fund High-Flyer) launched earlier this year with performance comparable to OpenAI’s latest models, markets briefly tumbled.

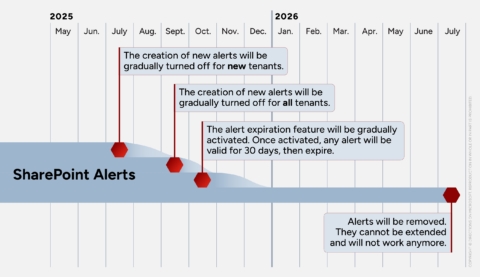

All of which is why OpenAI’s release of open-source GPT models is significant, and why Microsoft was quick to incorporate them into Azure AI Foundry. Note, in the chart above from the (extraordinary) benchmarking site Artificial Analysis, that prior to gpt-oss’s launch, the top-right quadrant would have been populated primarily by Chinese models: Alibaba’s Qwen, MiniMax, DeepSeek, and Z.ai (GLM).

The Geopolitics of It All

As early as 2017, China’s State Council declared AI a national priority. Many believe that its development and promulgation of open-source models enable “soft-power” politics in developing countries that would otherwise be unwilling to pay for higher-priced models.

And the efficacy and popularity of these models has (some would say finally) gotten the attention of American government and industry. In rapid order came the White House’s “AI Action Plan” on July 23rd, the release of OpenAI’s gpt-oss models to great hoopla just days later, Microsoft’s announcement of their availability on AI Foundry, and the creation of the ATOM Project (American Truly Open Models) shortly thereafter (full disclosure: I am a signatory to ATOM).

Open, But Not Necessarily Free

(Table: Azure pricing for gpt-oss and GPT-5. Microsoft sources: gpt-oss; gpt-5. *Input charges may be less if prompts are reused or cached.)

Now, squirreled away at the bottom of Microsoft’s announcement is the pricing of the gpt-oss models: that’s right, on Azure, the open-source models are not free! The larger of the two models costs $0.15 per million input tokens and $0.60 per million output tokens; the smaller model, which can run on a single VM, is charged based on the VM cost. But, and here’s the deal, as we’ve said, these models can be downloaded and run on customer hardware with no charge for the model itself.
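
The arithmetic is simple enough to sketch. Using the per-million-token prices quoted above for the larger gpt-oss model on Azure ($0.15 input, $0.60 output) and a hypothetical monthly workload, a Python estimate looks like this. Prices change, so treat the constants as illustrative, and note that caching discounts are ignored.

```python
from decimal import Decimal

# Per-million-token prices quoted in this post for the larger gpt-oss model on Azure.
INPUT_PRICE_PER_M = Decimal("0.15")
OUTPUT_PRICE_PER_M = Decimal("0.60")
MILLION = Decimal(1_000_000)


def token_cost(input_tokens: int, output_tokens: int) -> Decimal:
    """Pay-per-token cost in dollars; ignores prompt-caching discounts."""
    return (Decimal(input_tokens) / MILLION * INPUT_PRICE_PER_M
            + Decimal(output_tokens) / MILLION * OUTPUT_PRICE_PER_M)


# Hypothetical month: 50,000 requests, ~2,000 input and ~500 output tokens each.
requests = 50_000
cost = token_cost(requests * 2_000, requests * 500)
print(f"${cost:.2f} per month")  # 100M input -> $15.00, 25M output -> $15.00
```

`Decimal` is used rather than floats so that dollar amounts add up exactly. Running the downloaded model on your own hardware swaps this per-token bill for hardware, power, and operations costs.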

Of course, OpenAI and Microsoft want to maintain some differentiation between open-source and premium, paid-for models; GPT-5 (on Azure, nearly 10x as expensive as gpt-oss, and not downloadable) supports many more features, has been extensively red-teamed, and is integrated with AI Foundry’s model router, a cost-saving feature.

What to make of all this?

A while back, I made the claim that models are increasingly commoditized. And with the emergence of open-source models, customers can fine-tune them to meet the specific needs of their scenarios.

All this means there’s lots of choice, and customers will have to decide on which criteria to choose their models. Will it be on the basis of cost? The ability to download them? The use of Responsible AI guardrails? National origin?

As is so often the case, the answer is: it depends.

Think I need some fine-tuning? Drop me a line at bbriggs@directionsonmicrosoft.com.

No models were harmed in the creation of this blog post.