Updated: April 8, 2024 (November 15, 2023)

Microsoft bets on its own chips to boost Azure, AI workloads

Microsoft is finally going public on November 15 with one of the industry’s worst-kept secrets. For years, Microsoft has been rumored to be developing its own chips, including some based on the Arm architecture. Today at the company’s annual Ignite conference, officials are talking about what they’ve built and how Microsoft expects to use these chips to improve performance for itself and its customers and, it hopes, eventually reduce costs for both.

On day one of Ignite, Microsoft is showing off its “Azure Maia” AI accelerator chip, designed for cloud-based training and inferencing for AI workloads, as well as its Arm-based “Azure Cobalt” chip, meant to improve performance, power efficiency, and cost for general-purpose workloads. These chips will be incorporated into Azure’s infrastructure in 2024.

Officials said the in-house-designed Cobalt 100 CPU, the first of a planned series, is a 64-bit, 128-core chip that delivers up to a 40% performance improvement over current generations of Azure Arm chips. Company officials said Cobalt 100 is already being used, in test, to run parts of Teams, Azure Communication Services, and Azure SQL Database.

Besides using Cobalt 100 in-house, Microsoft plans to make Cobalt VMs available to customers for their own use sometime in 2024, officials said. Microsoft has offered Arm-based VMs to Azure customers since 2022 via a deal with startup Ampere Computing, but these VMs were designed specifically to run Windows 11 and various Linux distributions.

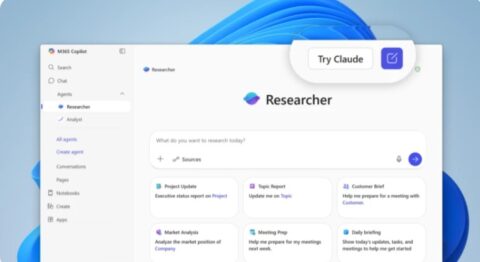

Microsoft execs said they have been testing Bing Chat (the consumer AI assistant service just rebranded to “Copilot”), Microsoft’s Phone AI services, as well as GitHub Copilot on the Maia 100 accelerator chips. Plans call for Microsoft to support not just first-party workloads on Maia, but also third-party ones, such as OpenAI’s GPT-3.5 Turbo, officials said.

More than just chips

In addition to showcasing its own silicon, Microsoft is making its Azure Boost system generally available as of today. Azure Boost is designed to speed up storage and networking by moving those processes off host servers onto customized hardware and software. Microsoft also plans to add AMD MI300X accelerated VMs to Azure that are designed to speed up AI workload processing, and it is delivering a preview of the NC H100 v5 VM series built for NVIDIA H100 Tensor Core GPUs, meant to improve mid-range AI training and generative AI inferencing.

Before Ignite this week, Microsoft had already been making numerous investments in Azure infrastructure, centered on developing its own chip, server, rack, networking, and other physical components (rather than buying them off the shelf). Years ago, it open-sourced its “Project Cerberus” cryptographic microcontroller, as well as its “Project Olympus” datacenter server design.

In 2022, Microsoft bought Lumenisity, a British startup that was working on a new form of optical fiber, called “hollow core,” designed to improve long-distance data-transfer speeds. In 2023, it bought Fungible, a developer of data processing unit (DPU) technology that can be used for a variety of network interface card enhancements.

Microsoft also has been pioneering work in two-phase liquid-immersion cooling. Microsoft implemented this technology in its Azure datacenter in Quincy, Wash., starting in 2021.

In other Azure-related Ignite news, Azure AI Studio, which Microsoft originally announced at its Build conference in May 2023, is now in public preview. Azure AI Studio provides a UI to help create customized AI-enabled chats, including enhancing the chat with organizational data. Windows AI Studio, a complementary product “available in the coming weeks,” will allow developers to run their AI models in the cloud on Azure or at the edge, locally on Windows.

Related Resources

Ignite 2023: Microsoft introduces new chips, Azure infrastructure

Microsoft hardware acquisitions target its datacenter infrastructure (Directions members only)

To cool datacenter servers, Microsoft turns to boiling liquid

From 2022: Azure Virtual Machines with Ampere Altra Arm–based processors—generally available

From 2020: Microsoft is designing its own Arm chips for datacenter servers: Report